Multi-Task Clothing Attribute Classification

As part of a team in the Hology v7 Data Mining Competition, I worked alongside Adzka Bagus Juniarta to develop a robust solution for classifying clothing attributes. The challenge involved predicting two attributes for each image: "jenis" (type: kaos, i.e. T-shirt, or not) and "warna" (color: red, yellow, blue, black, or white). With 777 training images and Exact Match Ratio (EMR) as the evaluation metric, our approach used multi-task learning to handle both classifications in a single model. This project showcased my skills in data preprocessing, model architecture design, and training optimization using PyTorch.

We started by setting up a reproducible environment in Google Colab, mounting Google Drive for data access, and importing essential libraries like Pandas for data handling, OpenCV for image processing, and PyTorch for model building. Random seeds were fixed across Python, NumPy, and PyTorch to ensure consistent results, and we enabled deterministic GPU behavior.
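
The seeding step can be sketched as follows. This is a minimal sketch, not the original notebook code: the helper name `seed_everything` and the seed value 42 are assumptions made for illustration.

```python
import random

import numpy as np
import torch


def seed_everything(seed: int = 42) -> None:
    """Fix the random seeds for Python, NumPy, and PyTorch (hypothetical helper)."""
    random.seed(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)
    # Seeds every CUDA device; silently ignored when no GPU is available.
    torch.cuda.manual_seed_all(seed)
    # Ask cuDNN for deterministic kernels and turn off its autotuner,
    # trading some speed for repeatable results.
    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False


seed_everything(42)  # 42 is an assumed value, not taken from the project
```

Calling the helper once at the top of the notebook, before any data split or model construction, keeps those steps repeatable between runs.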

Next, we loaded the training labels from a CSV file and inspected the class distributions. For "jenis," kaos (class 0) dominated with about 61% of the images (476 out of 777). For "warna," black (class 3) was the most common at 30.1% (234 images), followed by blue, white, yellow, and red. Because neither attribute was severely imbalanced, our strategy did not need a dedicated step for handling class imbalance.

To prepare the data, we created a custom ClothingDataset class inheriting from PyTorch's Dataset. This handled loading images (supporting both JPG and PNG formats), applying transformations, and returning labels for training or just images for testing. We split the data into 70% training and 30% validation sets.
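
A dataset class of this kind might look like the sketch below. Several details are assumptions: the constructor arguments, the `<id>.jpg` / `<id>.png` file naming, and the use of Pillow for loading (the project itself imported OpenCV; Pillow is swapped in here only to keep the sketch short).

```python
from pathlib import Path

from PIL import Image
from torch.utils.data import Dataset


class ClothingDataset(Dataset):
    """Yields (image, jenis, warna) when labels are given, else just the image."""

    EXTENSIONS = (".jpg", ".png")  # the two formats mentioned in the write-up

    def __init__(self, image_dir, ids, labels=None, transform=None):
        self.image_dir = Path(image_dir)
        self.ids = list(ids)
        self.labels = labels        # list of (jenis, warna) pairs; None for test data
        self.transform = transform  # optional callable applied to each image

    def __len__(self):
        return len(self.ids)

    def _find_image(self, image_id):
        # Try each supported extension (assumed naming: "<id>.jpg" or "<id>.png").
        for ext in self.EXTENSIONS:
            path = self.image_dir / f"{image_id}{ext}"
            if path.exists():
                return path
        raise FileNotFoundError(f"No image found for id {image_id}")

    def __getitem__(self, index):
        image = Image.open(self._find_image(self.ids[index])).convert("RGB")
        if self.transform is not None:
            image = self.transform(image)
        if self.labels is None:
            return image
        jenis, warna = self.labels[index]
        return image, jenis, warna
```

Keeping the labeled and unlabeled cases in one class means the same loading and transformation path is used for training, validation, and test images.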

Data augmentation was key to improving generalization, given challenges like multiple clothing items per image, distracting backgrounds, varying lighting, and intricate poses. For training, we used random flips, rotations, crops, and color jitter, followed by normalization with the ImageNet mean and standard deviation. Test transformations were simpler, limited to resizing and normalization.

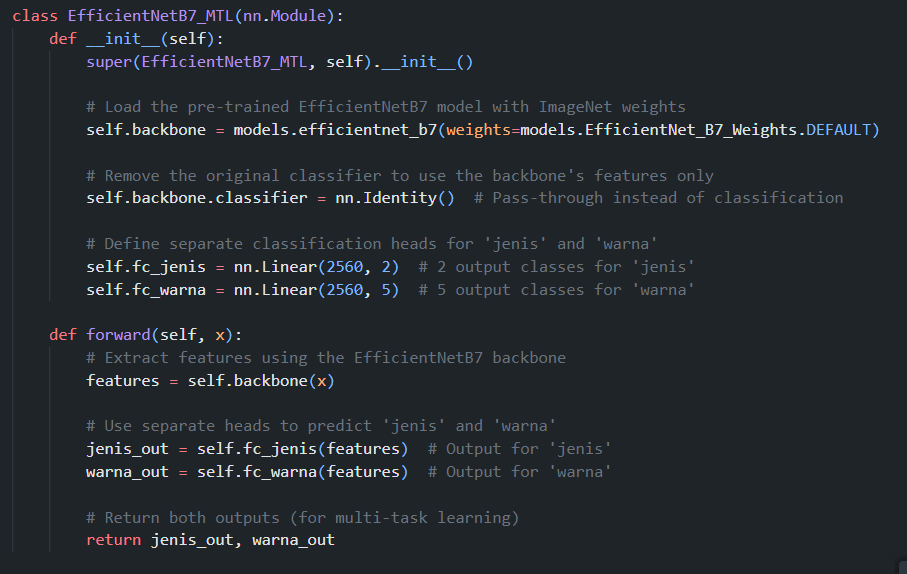

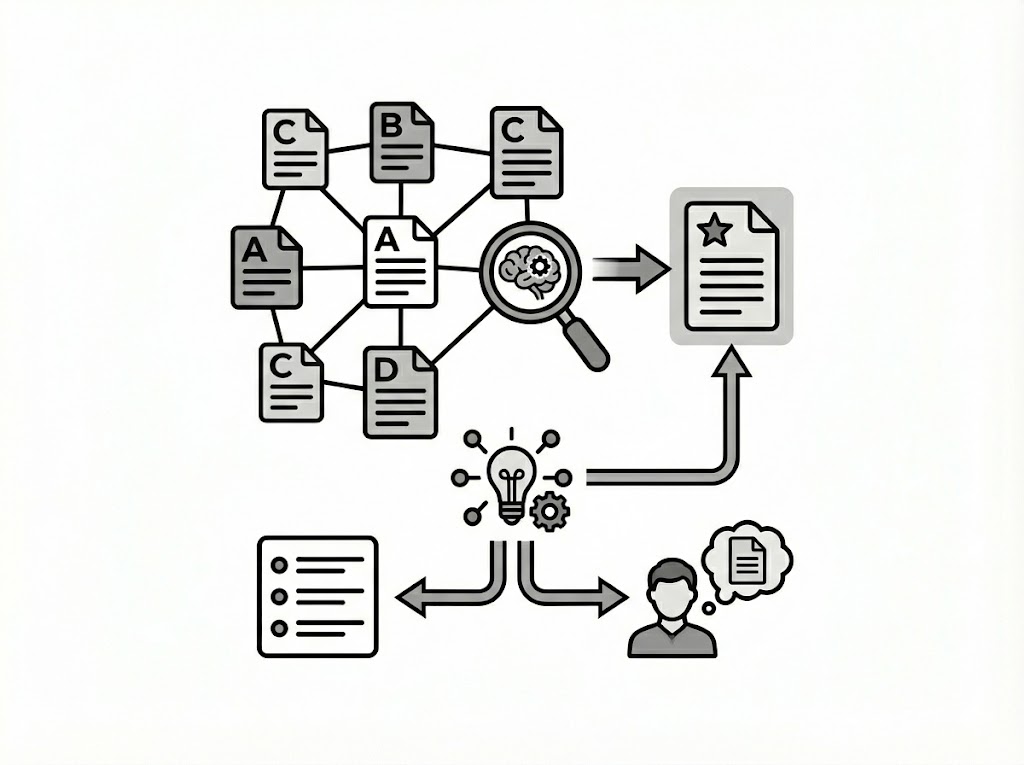

For the model, we chose EfficientNet-B7 as the backbone after experimenting with alternatives like ResNet and DenseNet, because it delivered the best EMR. We implemented a multi-task learning (MTL) setup by replacing the original classifier with two separate linear heads: one for "jenis" (2 classes) and one for "warna" (5 classes). Starting from pre-trained ImageNet weights, we fine-tuned all layers, which outperformed freezing the lower layers.

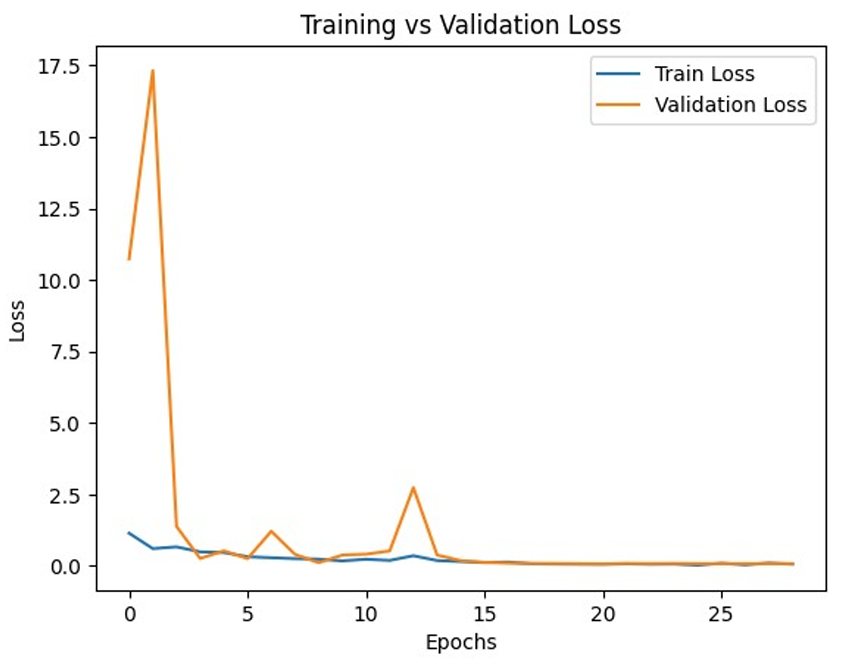

We trained on a GPU using CrossEntropyLoss for each task (summed to give the total loss), the Adam optimizer (initial LR 0.001), and a ReduceLROnPlateau scheduler. The training loop used early stopping (patience=8) to prevent overfitting. The model converged within 29 epochs: validation loss dropped significantly and local validation EMR reached 0.9744.
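
The pieces of this training setup fit together roughly as sketched below. The helper names (`multitask_loss`, `EarlyStopping`, `fit`), the scheduler's `factor` and `patience`, and the choice of validation loss as the monitored quantity are assumptions; only the summed loss, Adam at 0.001, ReduceLROnPlateau, and patience=8 come from the description above.

```python
import torch
from torch import nn

criterion = nn.CrossEntropyLoss()


def multitask_loss(jenis_logits, warna_logits, jenis_y, warna_y):
    """Total loss = cross-entropy on 'jenis' + cross-entropy on 'warna'."""
    return criterion(jenis_logits, jenis_y) + criterion(warna_logits, warna_y)


class EarlyStopping:
    """Signals a stop after `patience` epochs without a new best validation loss."""

    def __init__(self, patience=8):
        self.patience = patience
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def fit(run_epoch, scheduler, stopper, max_epochs=100):
    """run_epoch() trains for one epoch and returns the validation loss."""
    for epoch in range(1, max_epochs + 1):
        val_loss = run_epoch()
        scheduler.step(val_loss)    # lowers the LR when val_loss plateaus
        if stopper.step(val_loss):  # stop once patience is exhausted
            return epoch
    return max_epochs


stand_in = nn.Linear(4, 7)  # placeholder for the real network
optimizer = torch.optim.Adam(stand_in.parameters(), lr=1e-3)
scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(
    optimizer, mode="min", factor=0.5, patience=3  # assumed settings
)
stopper = EarlyStopping(patience=8)
```

In a real run, `run_epoch` would loop over the training loader, call `multitask_loss` on the two sets of logits, backpropagate, step the optimizer, and then evaluate on the validation loader.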

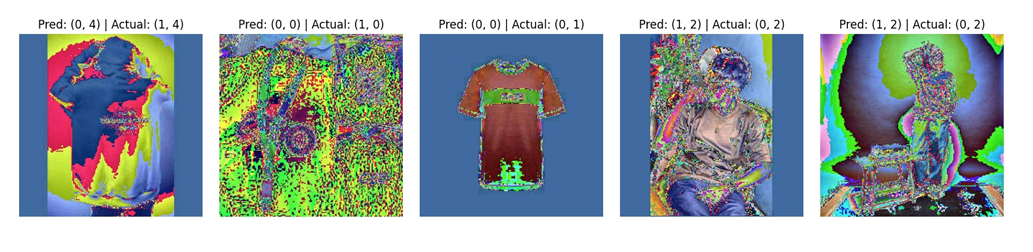

Evaluation on the validation set revealed only 5 misclassifications out of 195 images (EMR 0.9744). We visualized these to analyze the errors: most were color errors caused by lighting variations or type errors caused by ambiguous poses.
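
For reference, EMR under its usual definition counts a sample as correct only when every attribute matches, so here both "jenis" and "warna" must be right. A small sketch, with a hypothetical function name and synthetic labels that reproduce the counts quoted above:

```python
def exact_match_ratio(true_pairs, pred_pairs):
    """Fraction of samples whose (jenis, warna) prediction matches exactly."""
    if len(true_pairs) != len(pred_pairs):
        raise ValueError("true_pairs and pred_pairs must have the same length")
    if not true_pairs:
        raise ValueError("at least one sample is required")
    matches = sum(t == p for t, p in zip(true_pairs, pred_pairs))
    return matches / len(true_pairs)


# Synthetic illustration: 195 samples, 5 of them wrong in one attribute.
truth = [(0, 3)] * 195
preds = [(0, 3)] * 190 + [(0, 1)] * 3 + [(1, 3)] * 2
emr = exact_match_ratio(truth, preds)  # 190 / 195
```

A prediction with the right type but the wrong color (or the reverse) earns no partial credit, which makes EMR stricter than the per-attribute accuracies.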

Finally, we generated predictions on the test set (IDs 778-1111) and created a submission CSV. Our model scored 0.97 EMR on the public leaderboard, validating our approach.

This project honed my expertise in computer vision and multi-task learning, and showed how effective transfer learning and careful augmentation can be on real-world data.

Statistic & Data Analysis

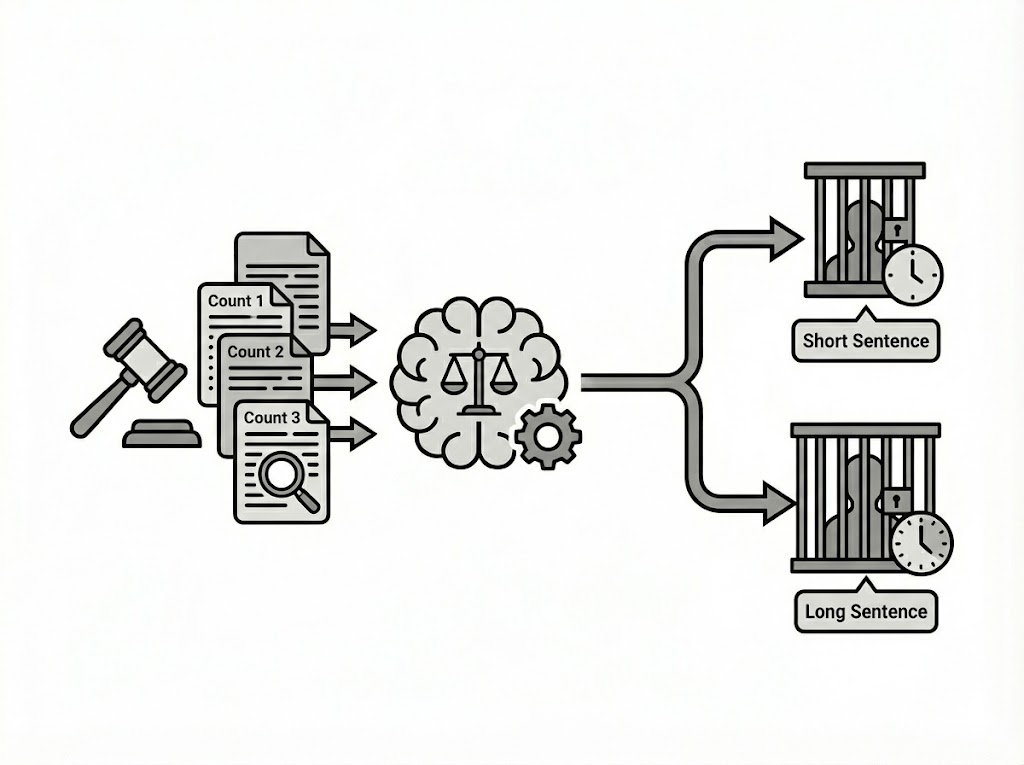

Predicting Judicial Sentences: Analyzing Indonesian Court Verdicts with NLP and ML

DS/ML/AI Engineering

Driver Drowsiness Classification

Statistic & Data Analysis