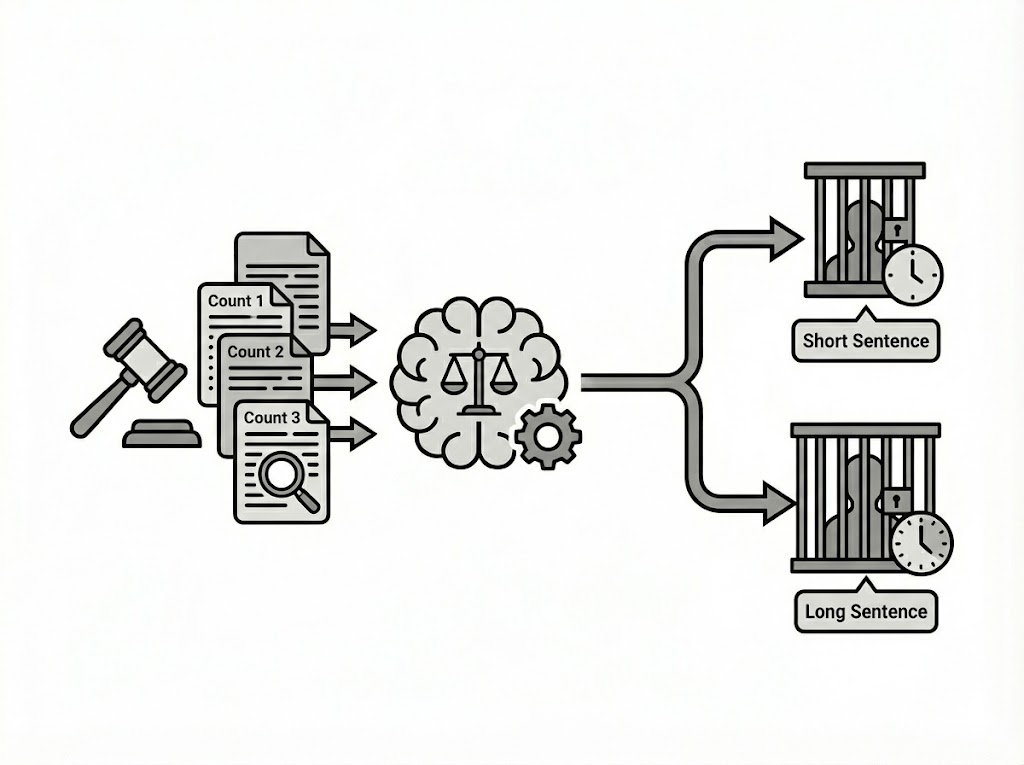

Predicting Judicial Sentences: Analyzing Indonesian Court Verdicts with NLP and ML

The Indonesian legal system produces extensive textual data in the form of court verdicts published by the Mahkamah Agung (Supreme Court). Extracting quantitative information from these verdicts, such as the imposed sentence length ("lama hukuman (bulan)", i.e., sentence length in months), is hindered by inconsistent formatting, repetitive boilerplate, typos, and rare outliers (e.g., life sentences coded as 88888). This project aimed to build an accurate regression model that predicts sentence duration from the verdict text alone, using over 16,500 training samples and 6,600 test samples. Such a model has potential applications in legal analytics, including recognizing sentencing trends, detecting bias, and automating case summarization, and the project exercises the full workflow of handling real-world, unstructured data.

Data Preparation and Cleaning

The datasets were loaded from Parquet files in a Google Colab environment. They contain columns for document IDs and raw text, plus the target sentence length in the training set. Initial steps included mounting Google Drive for file access and setting random seeds in NumPy, PyTorch, and the other libraries used, so that runs are reproducible.
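Seeding every random number generator in one place is what makes a Colab run repeatable. Below is a minimal sketch; the helper name `set_seed` and the seed value 42 are assumptions, and NumPy and PyTorch are seeded only if they are installed.

```python
import os
import random


def set_seed(seed: int = 42) -> None:
    """Seed the RNGs the pipeline may touch (hypothetical helper; seed value is an assumption)."""
    # Only affects subprocesses / freshly started interpreters, not the current one.
    os.environ["PYTHONHASHSEED"] = str(seed)
    random.seed(seed)
    try:
        import numpy as np
        np.random.seed(seed)
    except ImportError:
        pass  # NumPy not installed: nothing to seed
    try:
        import torch
        torch.manual_seed(seed)
        if torch.cuda.is_available():
            torch.cuda.manual_seed_all(seed)
        # Trade some GPU speed for deterministic cuDNN kernels.
        torch.backends.cudnn.deterministic = True
        torch.backends.cudnn.benchmark = False
    except ImportError:
        pass  # PyTorch not installed: nothing to seed


set_seed(42)
```

The Parquet files can then be read with `pandas.read_parquet`; the actual Google Drive paths are not given in the write-up.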

Outlier handling was critical: the single life-sentence instance (coded 88888) was removed to keep it from skewing the model, leaving 16,571 training rows. Text preprocessing used a regular-expression (regex) pipeline to normalize whitespace, remove boilerplate blocks (e.g., disclaimers, footers, and repeated headers such as "Mahkamah Agung Republik Indonesia"), and eliminate redundant lines. Deletion patterns were compiled with anchors so that each removal stays bounded to a specific section, leaving only the substantive content (charges, considerations, and rulings). A final normalization pass stripped newlines, collapsed whitespace, and dropped the original text columns after cleaning to save memory. The pipeline relied on pandas, re, and unicodedata.
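A minimal sketch of the outlier filter and the cleaning steps, written with the standard library only. The three regex patterns are hypothetical stand-ins, since the project's real pattern list is not published; the disclaimer pattern illustrates an anchored, bounded deletion. In pandas, the outlier filter would be `train = train[train["lama hukuman (bulan)"] != 88888]`.

```python
import re
import unicodedata

TARGET = "lama hukuman (bulan)"  # target column name from the dataset
LIFE_SENTENCE_CODE = 88888       # life-imprisonment sentinel in the target

# Hypothetical boilerplate patterns; the project's real pattern list is not published.
BOILERPLATE_PATTERNS = [
    # repeated page header
    re.compile(r"mahkamah agung republik indonesia", re.IGNORECASE),
    # anchored, bounded deletion: from "Disclaimer" up to the next blank line (or end of text)
    re.compile(r"\bdisclaimer\b.*?(?=\n\n|\Z)", re.IGNORECASE | re.DOTALL),
    # page footer such as "Halaman 3 dari 20" ("page 3 of 20")
    re.compile(r"halaman \d+ dari \d+", re.IGNORECASE),
]


def drop_life_sentences(rows):
    """Drop training rows whose target is the life-sentence code."""
    return [row for row in rows if row[TARGET] != LIFE_SENTENCE_CODE]


def clean_text(text: str) -> str:
    """Normalize Unicode, strip boilerplate, drop repeated lines, collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)
    for pattern in BOILERPLATE_PATTERNS:
        text = pattern.sub(" ", text)
    seen, kept = set(), []
    for line in text.splitlines():
        key = line.strip().lower()
        if key and key not in seen:  # skip blank lines and lines already seen
            seen.add(key)
            kept.append(line.strip())
    # Joining with spaces removes newlines; \s+ collapses any remaining whitespace runs.
    return re.sub(r"\s+", " ", " ".join(kept)).strip()
```

Patterns run before the text is split into lines, so multi-line blocks such as the disclaimer can still be matched as a unit.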

Feature Engineering

To turn the unstructured text into predictive features, a rule-based pipeline extracted structured elements such as word counts, the average length of the text's sentences, and keyword-based indicators (e.g., counts of charge-related terms such as "dakwaan", the indictment, and ruling terms such as "amar", the operative part of the verdict). High-signal segments were isolated for targeted analysis: the prosecution's sentencing demand ("tuntutan"), the operative ruling ("amar"), and the court's considerations ("menimbang").
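The rule-based extraction can be sketched as follows. The keyword list, the segment anchors, and the 500-character window are hypothetical choices made for illustration. Anchoring the "amar" segment on "MENGADILI" reflects the usual layout of Indonesian verdicts, where the operative ruling follows that word, but it is still an assumption about this project.

```python
import re
from collections import Counter

WORD_RE = re.compile(r"\w+")
SENTENCE_SPLIT_RE = re.compile(r"[.!?]+")

# Hypothetical lexicon and segment anchors; the project's actual lists are not published.
KEYWORDS = ["dakwaan", "amar", "tuntutan", "menimbang", "pidana", "penjara"]
SEGMENT_ANCHORS = {
    "tuntutan": "tuntutan",    # prosecution's sentencing demand
    "amar": "mengadili",       # operative ruling usually follows "MENGADILI"
    "menimbang": "menimbang",  # court's considerations
}


def extract_segment(text: str, anchor: str, window: int = 500) -> str:
    """Return up to `window` characters starting at the first match of `anchor` ('' if absent)."""
    match = re.search(rf"\b{re.escape(anchor)}\b", text, flags=re.IGNORECASE)
    return text[match.start(): match.start() + window] if match else ""


def count_features(text: str) -> dict:
    """Word count, sentence count, average sentence length (in words), keyword counts."""
    words = [w.lower() for w in WORD_RE.findall(text)]
    sentences = [s for s in SENTENCE_SPLIT_RE.split(text) if s.strip()]
    counts = Counter(words)
    feats = {
        "n_words": len(words),
        "n_sentences": len(sentences),
        "avg_sentence_len": len(words) / max(1, len(sentences)),
    }
    feats.update({f"kw_{kw}": counts[kw] for kw in KEYWORDS})
    return feats


def segments(text: str) -> dict:
    """Isolate the high-signal segments named in SEGMENT_ANCHORS."""
    return {name: extract_segment(text, anchor) for name, anchor in SEGMENT_ANCHORS.items()}
```

The isolated segments can then be vectorized or keyword-counted separately from the full text.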

Further features included log transforms of skewed counts (e.g., the log of the word count, to reduce variance), binary flags for the presence or absence of specific legal phrases, and interaction and ratio features (e.g., the share of punishment-related words among all words). The text itself was vectorized with TF-IDF and compressed with TruncatedSVD into dense latent semantic components. Features were ranked by mutual information regression and permutation importance, and a cross-validated sweep over K then chose how many of the top-ranked features to keep. The final training matrix had up to 45 columns, balancing interpretability and predictive power.
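The derived features and the K sweep can be illustrated without any ML library. In the sketch below, the word and phrase lists are hypothetical, and `cv_rmse` is a caller-supplied function (also hypothetical) that would train and cross-validate a model on a feature subset. The ranking that feeds the sweep would come from tools such as scikit-learn's `mutual_info_regression` and `permutation_importance`, and the TF-IDF/SVD step from `TfidfVectorizer` and `TruncatedSVD`; those steps are not reproduced here.

```python
import math

# Hypothetical word lists; the actual phrases behind the project's flags are not published.
PUNISHMENT_WORDS = {"pidana", "penjara", "denda"}
FLAG_PHRASES = {"flag_residivis": "residivis", "flag_narkotika": "narkotika"}


def derived_features(words, text):
    """Log-transformed count, a ratio feature, an interaction term, and binary phrase flags."""
    n = len(words)
    log_n = math.log1p(n)  # log(1 + n) tames the right-skewed word count
    punish_ratio = sum(w in PUNISHMENT_WORDS for w in words) / max(1, n)
    feats = {
        "log_n_words": log_n,
        "punish_ratio": punish_ratio,
        "log_n_x_punish_ratio": log_n * punish_ratio,  # interaction term
    }
    lowered = text.lower()
    for name, phrase in FLAG_PHRASES.items():
        feats[name] = int(phrase in lowered)
    return feats


def select_top_k(ranked_features, cv_rmse, k_values):
    """Sweep K over a ranked feature list and keep the K with the lowest CV RMSE.

    `cv_rmse` is a caller-supplied function mapping a feature subset to its CV RMSE.
    """
    scores = {k: cv_rmse(ranked_features[:k]) for k in k_values}
    best_k = min(scores, key=scores.get)
    return best_k, scores[best_k]
```

Because the sweep only sees a ranked list and a scoring callable, the same function works whichever importance measure produced the ranking.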

Modeling Approach

A hybrid stacked ensemble combined the strengths of classical ML and deep learning. The base "expert" models were gradient boosters (LightGBM (LGBM), XGBoost, and CatBoost) for handling numerical and categorical features efficiently; a RandomForestRegressor for non-linear interactions; and Ridge regression on text embeddings for a text-focused view. The deep-learning component used PyTorch with the Hugging Face transformers library (AutoTokenizer and AutoModel) to generate contextual embeddings, trained with the AdamW optimizer, mixed precision via GradScaler, and a linear warmup learning-rate schedule.
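A "linear warmup" schedule is commonly defined as a learning-rate multiplier that rises linearly from 0 to 1 over the warmup steps and then decays linearly to 0 by the final step, which is the shape of `get_linear_schedule_with_warmup` in Hugging Face transformers. The write-up does not say whether the project decayed after warmup, so the decay half is an assumption. A dependency-free sketch of the multiplier, with a hypothetical function name:

```python
def linear_warmup_multiplier(step: int, warmup_steps: int, total_steps: int) -> float:
    """LR multiplier: 0 -> 1 linearly over warmup_steps, then 1 -> 0 linearly by total_steps."""
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    # Linear decay after warmup, clamped at 0 past the final step.
    return max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))
```

In PyTorch it would be attached with `torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda=lambda s: linear_warmup_multiplier(s, warmup, total))`, stepping the scheduler once per optimizer step. The `warmup` and `total` step counts are placeholders.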

Training used stratified 5-fold cross-validation with RMSE as the objective. Because plain KFold cannot stratify a continuous target, strata were formed by adaptively binning the imbalanced target. Base-model predictions were stacked as meta-features for a second stage. Several meta-learners (RidgeCV, ElasticNet, LassoCV, HuberRegressor, and LGBM) were trained on these meta-features, and their outputs were blended with weights fitted by non-negative least squares (NNLS). To limit overfitting, this model diversity was combined with early stopping, feature selection via Spearman correlation, and isotonic regression for calibration.
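Stratifying a regression target means binning it first and then spreading each bin evenly across folds. The sketch below does this with the standard library; 10 equal-count bins and the seed are assumptions. With scikit-learn, the usual equivalent is `StratifiedKFold` applied to labels from `pd.qcut(y, q=n_bins, labels=False, duplicates="drop")`.

```python
import random


def binned_stratified_folds(y, n_splits=5, n_bins=10, seed=42):
    """Assign a fold id to each sample so every fold sees a similar target distribution.

    The continuous target is split into equal-count (quantile) bins by rank; samples in
    each bin are shuffled and dealt round-robin across folds.
    """
    n = len(y)
    order = sorted(range(n), key=lambda i: y[i])  # sample indices sorted by target
    # Cap the bin count so each bin holds at least n_splits samples when possible.
    n_bins = max(1, min(n_bins, n // n_splits))
    rng = random.Random(seed)
    folds = [0] * n
    counter = 0  # carried across bins so fold sizes differ by at most one
    for b in range(n_bins):
        members = order[b * n // n_bins:(b + 1) * n // n_bins]
        rng.shuffle(members)
        for idx in members:
            folds[idx] = counter % n_splits
            counter += 1
    return folds
```

Sample `i` is then validated in fold `folds[i]` and trained on in all others, so rare long sentences appear in every validation fold rather than clustering in one.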

Results and Insights

The stacked ensemble achieved competitive cross-validated RMSE. The final meta-weights favored LGBM_meta (0.416), Ridge (0.386), and Huber (0.198), which together sum to 1. Feature importance highlighted legal keywords and text-length features as key predictors, pointing to interpretable sentencing signals such as severity indicators in the prosecution's demand ("tuntutan"). The model also handled edge cases such as widely varying document lengths and noisy inputs.
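The reported weights sum to 1 (0.386 + 0.198 + 0.416 = 1.000), which suggests the NNLS solution was rescaled to sum to one. That normalization is an assumption. The sketch below solves NNLS with cyclic coordinate descent, a simple stand-in for the active-set solver in `scipy.optimize.nnls`, and then normalizes the weights and applies them to meta-learner predictions. All function names are hypothetical.

```python
def nnls_weights(preds, y, n_sweeps=5000):
    """Non-negative least squares via cyclic coordinate descent.

    preds: one list of predictions per meta-learner; y: targets.
    Minimizes ||sum_j w_j * preds[j] - y||^2 subject to every w_j >= 0.
    """
    w = [0.0] * len(preds)
    resid = list(y)  # residual r = y - A w, starting from w = 0
    col_sq = [sum(v * v for v in col) for col in preds]
    for _ in range(n_sweeps):
        for j, col in enumerate(preds):
            if col_sq[j] == 0:
                continue
            # Exact minimizer along coordinate j, projected onto w_j >= 0.
            new_wj = max(0.0, w[j] + sum(c * r for c, r in zip(col, resid)) / col_sq[j])
            delta = new_wj - w[j]
            if delta:
                resid = [r - delta * c for r, c in zip(resid, col)]
                w[j] = new_wj
    return w


def normalize_weights(w):
    """Rescale non-negative weights to sum to 1 (left unchanged if all are zero)."""
    total = sum(w)
    return [wi / total for wi in w] if total > 0 else list(w)


def blend(preds, weights):
    """Weighted sum of meta-learner predictions, sample by sample."""
    return [sum(wj * col[i] for wj, col in zip(weights, preds)) for i in range(len(preds[0]))]


# Applying the reported weights, ordered as (Ridge, Huber, LGBM_meta):
reported = normalize_weights([0.386, 0.198, 0.416])
```

Because the weights are non-negative and sum to 1, the blend stays within the range spanned by the meta-learners' predictions for each case.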

This project combines NLP techniques (regex cleaning, TF-IDF, transformers) with ensemble ML for a domain-specific regression task. Future extensions could include fine-tuned LLMs for better semantic understanding, or deployment as a web tool for legal professionals. It showcases an end-to-end data science pipeline, from raw data wrangling to optimized modeling, that transfers to other text-heavy domains such as healthcare or finance.

DS/ML/AI Engineering

Motion Matters: Human Fall Detection Classification for Safety Insight

Statistic & Data Analysis

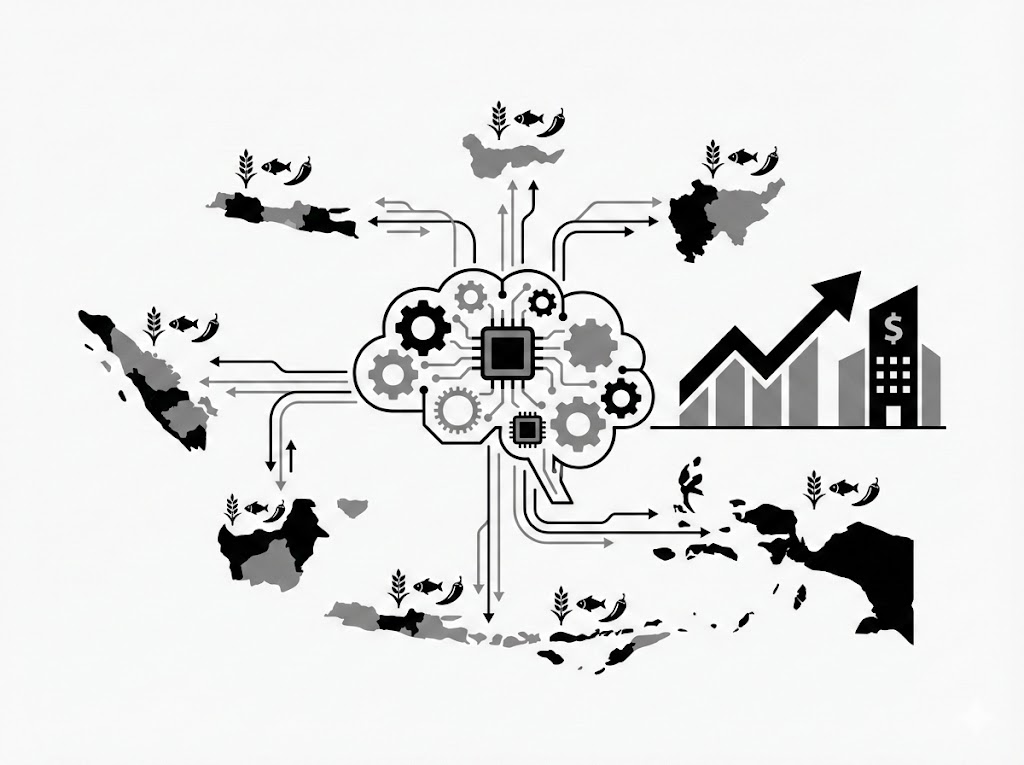

Ensemble Learning for Multi-Regional Food Price Prediction in Indonesia

Statistic & Data Analysis